DeepMind's Aletheia: Dawn of Autonomous AI Researchers

Most AI headlines are noise. This one isn’t.

DeepMind’s Aletheia isn’t impressive because it got a high score — it’s impressive because it models a workflow that resembles real research operations: generate ideas, challenge them, revise under pressure, and only then produce a final output. That is exactly the jump we need if AI is going to be useful for engineers, analysts, and product teams working with real stakes.

Why This Is Different From “Smart Prompting”

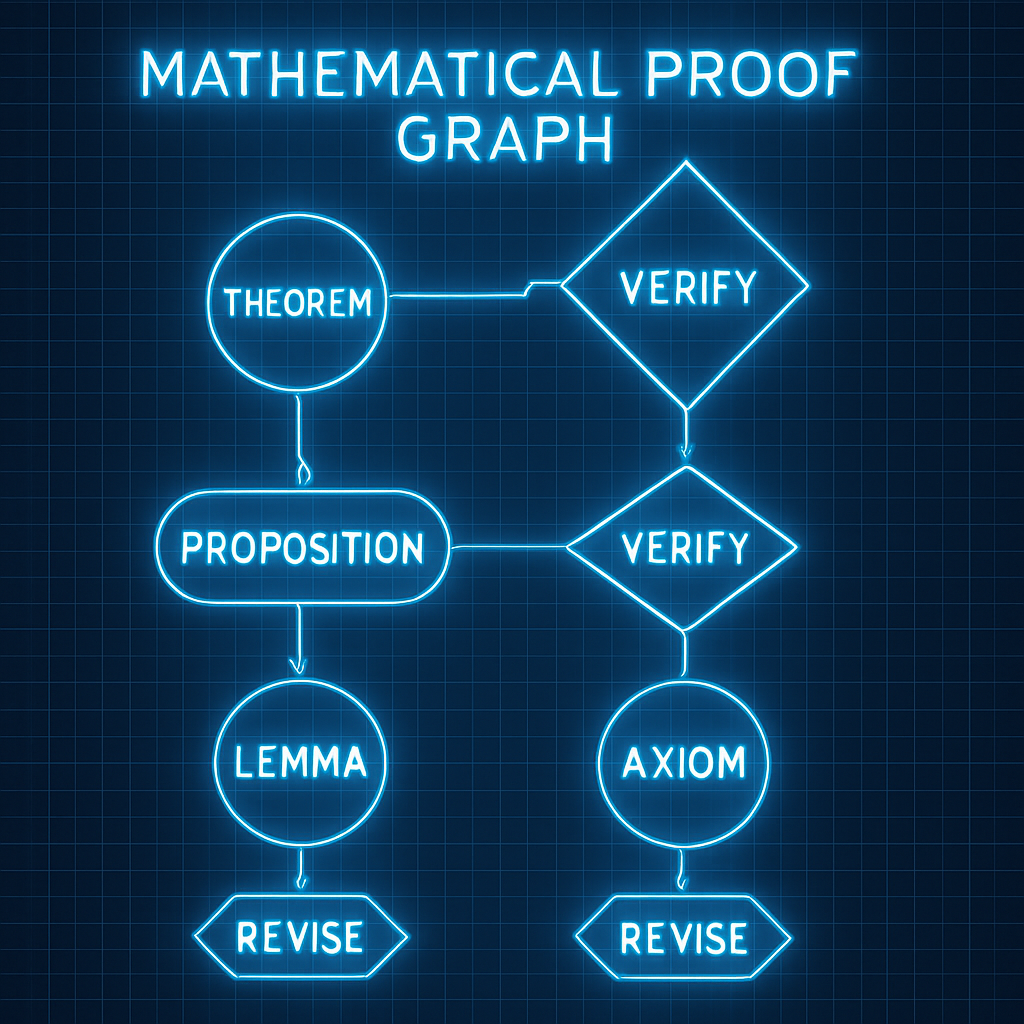

We’ve all seen models that sound confident and still miss critical details. Aletheia’s value is the structure behind the answer. Instead of one-pass generation, it runs a loop where output quality is earned through verification. This is closer to how elite teams actually work: hypotheses first, red-team second, publication third.

What Builders Should Copy Immediately

- Separate generator from verifier: do not let one model grade its own homework.

- Create explicit failure checks: force outputs through contradiction and evidence tests.

- Log iteration history: teams need traceability, not just final text.

- Reward correction loops: better to revise once than fail silently in production.
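
The four points above can be sketched in a few lines of Python. This is a minimal illustration, not Aletheia's actual implementation: `run_loop`, `Attempt`, `Result`, and the toy generator and check are hypothetical names made up for this example, and in a real system the generator and the verifier checks would call separate models or tools.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# A check returns None when the draft passes, or a short failure message.
Check = Callable[[str], Optional[str]]
# A generator takes the task plus the previous round's failures.
Generator = Callable[[str, List[str]], str]


@dataclass
class Attempt:
    round_no: int
    draft: str
    failures: List[str]


@dataclass
class Result:
    answer: Optional[str]  # None means no draft survived verification
    history: List[Attempt] = field(default_factory=list)


def run_loop(generate: Generator, checks: List[Check], task: str,
             max_rounds: int = 3) -> Result:
    """Generate, verify with independent checks, revise, and log every round."""
    result = Result(answer=None)
    feedback: List[str] = []
    for round_no in range(1, max_rounds + 1):
        draft = generate(task, feedback)
        # The verifier side: checks live outside the generator.
        failures = []
        for check in checks:
            message = check(draft)
            if message is not None:
                failures.append(message)
        result.history.append(Attempt(round_no, draft, failures))
        if not failures:
            result.answer = draft
            break
        feedback = failures  # feed the failures into the next revision
    return result


# Toy stand-ins (assumptions for illustration only).
def toy_generate(task: str, feedback: List[str]) -> str:
    if "no evidence cited" in feedback:
        return "Claim X holds [source: placeholder]."
    return "Claim X holds."


def needs_evidence(draft: str) -> Optional[str]:
    return None if "[source:" in draft else "no evidence cited"


result = run_loop(toy_generate, [needs_evidence], "Assess claim X")
for attempt in result.history:
    print(attempt.round_no, attempt.failures)
```

If no draft passes within `max_rounds`, `answer` stays `None`, so the failure is explicit and the full history is still available for review.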

What This Means for the Next 12 Months

If this pattern keeps scaling, we’ll see autonomous research agents move into specialized verticals first: materials, cybersecurity, finance, logistics, and applied science. The winners won’t be the teams with the loudest demos — they’ll be the teams with the best process hygiene.

As a veteran founder, I see this mapping directly onto mission execution: set a clear objective, keep feedback loops clean, run an after-action review, then improve. Same principle, different battlefield.

Bottom line: Aletheia matters because it brings research-grade workflow discipline into mainstream AI design. If you’re building for production, copy the loop — not the hype.

Want more practical breakdowns like this? Follow the blog and share this with a builder who’s still treating AI as autocomplete.
